Scrapoxy is an open source proxy orchestration tool that unifies multiple proxies behind one user-friendly endpoint. It started as a way to address IP rotation and ban detection for web scraping and security testing. Over time, it has evolved into a feature-packed solution that simplifies how you manage and scale proxies from different sources.

If your tasks involve rotating IPs for large-scale data gathering or maintaining failover for network testing, Scrapoxy helps you configure, monitor, and adjust proxies without juggling multiple platforms.

Key Features

1. Centralized Proxy Management

Scrapoxy works as a “super proxy”: you point your scraper or application at Scrapoxy’s proxy port, and it distributes requests across your connected proxies behind the scenes. This means:

- Less overhead: You don’t need to constantly update proxy lists or manage IP rotation in every tool you use.

- Consistent setup: All your tools use the same authentication details and request handling.

- Simple maintenance: If you change or add proxies, you do it once in Scrapoxy instead of updating each application.
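To make the “super proxy” idea concrete, here is a toy Python model of a single entry point that hands each request to the next upstream proxy in turn. The class and method names (`SuperProxy`, `route`, `set_upstreams`) are hypothetical, and plain round-robin is an assumption chosen for illustration. Scrapoxy’s real routing also takes proxy health and project settings into account.

```python
from __future__ import annotations


class SuperProxy:
    """Toy model of one endpoint fronting many upstream proxies (not Scrapoxy's code)."""

    def __init__(self, upstreams: list[str]) -> None:
        self._upstreams = list(upstreams)
        self._next = 0

    def set_upstreams(self, upstreams: list[str]) -> None:
        """Change the proxy list in one place; clients keep using the same endpoint."""
        self._upstreams = list(upstreams)
        self._next = 0

    def route(self, url: str) -> str:
        """Return the upstream proxy that would carry the request to `url`."""
        if not self._upstreams:
            raise RuntimeError("no upstream proxies available")
        # Round-robin: consecutive requests leave through different proxies.
        proxy = self._upstreams[self._next % len(self._upstreams)]
        self._next += 1
        return proxy
```

The point of the design is that clients only ever know the `SuperProxy` endpoint, so swapping or adding upstreams never touches the scrapers themselves.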

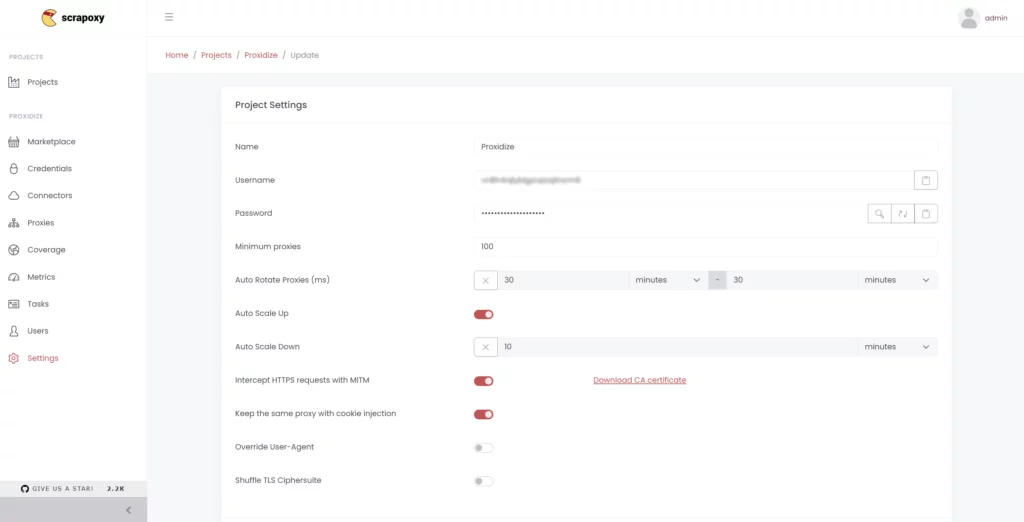

2. Automatic Scaling and Rotation

Scrapoxy automatically starts or stops proxies based on traffic patterns. This is especially useful when your workload spikes unexpectedly. Automatic actions include:

- Auto scale up: When demand rises, Scrapoxy can bring more proxies online.

- Auto scale down: When activity returns to normal, Scrapoxy stops unnecessary proxies to lower costs.

- Auto rotate: At set intervals or when certain conditions are met, Scrapoxy can swap out proxies to reduce the risk of IP blocks.
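The three automatic actions can be sketched as a small policy object. This is a minimal illustration under stated assumptions: the field names, defaults, and the “scale up on any recent traffic, scale down after an idle timeout” rule are hypothetical, and rotation is modeled as giving each proxy a random lifetime between two bounds. It is not Scrapoxy’s configuration schema.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    """Hypothetical scale/rotate policy; field names and defaults are assumptions."""

    min_proxies: int = 1            # kept online while the project is idle
    max_proxies: int = 10           # brought online while traffic is flowing
    idle_timeout_s: float = 300.0   # scale down after this long without requests
    rotate_min_s: float = 600.0     # shortest lifetime before a proxy is replaced
    rotate_max_s: float = 1800.0    # longest lifetime before a proxy is replaced

    def target_count(self, now: float, last_request_at: float | None) -> int:
        """Auto scale up on recent traffic, auto scale down once it goes idle."""
        if last_request_at is not None and now - last_request_at < self.idle_timeout_s:
            return self.max_proxies
        return self.min_proxies

    def draw_lifetime(self, rng: random.Random) -> float:
        """Random lifetime so proxies are not all rotated at the same moment."""
        return rng.uniform(self.rotate_min_s, self.rotate_max_s)

    @staticmethod
    def should_rotate(started_at: float, lifetime: float, now: float) -> bool:
        """Auto rotate: replace a proxy once it has outlived its drawn lifetime."""
        return now - started_at >= lifetime
```

Randomizing lifetimes rather than using one fixed interval avoids replacing the whole pool at once, which would briefly leave no warm proxies.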

3. Ban Detection and Proxy Health Checks

If a proxy fails or gets blocked, Scrapoxy detects this and removes that proxy from the rotation. This helps ensure:

- Minimal downtime: Your scraping or testing won’t fail just because one proxy is offline.

- A continuous pool of working IPs: Scrapoxy seamlessly reroutes traffic to the healthiest proxies available.

- Simple re-entry: Once a banned or faulty proxy recovers, Scrapoxy can add it back to the rotation.
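A minimal sketch of this remove-then-readmit cycle is shown below. The ban signals (HTTP 403 and 429, or a failed connection), the failure threshold, and the cooldown are all assumptions made for illustration; `ProxyPool` is a hypothetical name and does not reflect how Scrapoxy actually decides that a proxy is banned.

```python
from __future__ import annotations

BAN_STATUS_CODES = {403, 429}  # assumption: treat these responses as ban signals


class ProxyPool:
    """Hypothetical health tracker, not Scrapoxy's ban-detection logic."""

    def __init__(self, proxies: list[str], max_failures: int = 3, cooldown_s: float = 120.0) -> None:
        self.max_failures = max_failures
        self.cooldown_s = cooldown_s
        self._failures = {p: 0 for p in proxies}
        self._benched_until: dict[str, float] = {}

    def record(self, proxy: str, status: int | None, now: float) -> None:
        """Record one result; `status=None` means the connection itself failed."""
        if status is None or status in BAN_STATUS_CODES:
            self._failures[proxy] += 1
            if self._failures[proxy] >= self.max_failures:
                # Take the proxy out of rotation for a cooldown period.
                self._benched_until[proxy] = now + self.cooldown_s
        else:
            self._failures[proxy] = 0  # a healthy response resets the streak

    def available(self, now: float) -> list[str]:
        """Proxies currently in rotation; benched ones re-enter after cooldown."""
        for proxy, until in list(self._benched_until.items()):
            if now >= until:
                del self._benched_until[proxy]
                self._failures[proxy] = 0
        return [p for p in self._failures if p not in self._benched_until]
```

Requiring several consecutive failures before benching a proxy keeps a single flaky response from shrinking the pool.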

4. Traffic Interception and MITM

Scrapoxy provides an optional man-in-the-middle (MITM) mode for scenarios that require deeper control:

- Debugging or testing: You can inspect requests and responses in real time to spot hidden redirects or examine cookies.

- Header injection: Scrapoxy can add tokens, rotate user agents, or standardize request headers.

- Advanced scraping: Handling JavaScript-heavy sites can call for direct traffic manipulation to manage sessions properly.

When MITM is enabled, Scrapoxy generates a certificate authority (CA) and uses it to re-sign HTTPS traffic, which is what lets it decrypt and modify that traffic. Installing this CA as trusted on your local machine or scraping environment prevents TLS certificate errors in your clients.
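On the client side, “installing the CA” can also mean trusting it for a single program instead of system-wide. The Python sketch below assumes you have saved Scrapoxy’s CA certificate to a local PEM file; the file path and the helper name `make_mitm_opener` are hypothetical.

```python
from __future__ import annotations

import ssl
import urllib.request


def make_mitm_opener(proxy_url: str, ca_file: str | None) -> urllib.request.OpenerDirector:
    """Opener that routes through the proxy and trusts its MITM CA.

    `ca_file` is wherever you saved the CA certificate exported from Scrapoxy
    (hypothetical path). With a path, only that CA is trusted; with None, the
    system trust store is used instead.
    """
    context = ssl.create_default_context(cafile=ca_file)
    return urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url}),
        urllib.request.HTTPSHandler(context=context),
    )


# Example (not executed here):
# opener = make_mitm_opener("http://USER:PASS@localhost:8888", "/path/to/scrapoxy-ca.pem")
# with opener.open("https://example.com", timeout=30) as resp:
#     print(resp.status)
```

Trusting only the Scrapoxy CA is enough in MITM mode because every HTTPS response reaching the client is re-signed by it; verification stays on, so a misconfigured proxy still fails loudly.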

5. Session Stickiness and Cookie Injection

Some sites tie sessions to a specific IP. Scrapoxy’s sticky session feature lets you keep the same IP across multiple requests:

- Stable login flows: If a site expects you to stay on one IP after logging in, sticky sessions handle that seamlessly.

- Consistent browsing: Perfect for scripting or browser automation that depends on a single IP.

- A/B testing: If you need to simulate a user journey on a single IP, sticky sessions help maintain your user identity.
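Conceptually, a sticky session is a pin from a session key to one proxy. The sketch below models that mapping in Python. The session key, the round-robin choice for a new session, and the names `StickySessions`, `proxy_for`, and `release` are hypothetical; Scrapoxy’s own mechanism for identifying sessions is not shown here.

```python
from __future__ import annotations

import itertools


class StickySessions:
    """Pin each session to one proxy (hypothetical model, not Scrapoxy's API)."""

    def __init__(self, proxies: list[str]) -> None:
        self._proxies = list(proxies)
        self._counter = itertools.count()
        self._pins: dict[str, str] = {}

    def proxy_for(self, session_id: str) -> str:
        # The first request of a session picks the next proxy round-robin;
        # every later request in the same session reuses that proxy.
        if session_id not in self._pins:
            index = next(self._counter) % len(self._proxies)
            self._pins[session_id] = self._proxies[index]
        return self._pins[session_id]

    def release(self, session_id: str) -> None:
        """Forget the pin, e.g. after logout or when the pinned proxy is removed."""
        self._pins.pop(session_id, None)
```

Different sessions still spread across the pool, so stickiness per user does not undo rotation across users.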

6. Dashboard and User Interface

Scrapoxy comes with a browser-based dashboard on port 8890. This interface shows your active proxies and usage data in real time. You can create projects, manage connectors, and review metrics at a glance:

- Projects: Each project can represent a separate environment or scraping job.

- Connectors and credentials: Store API keys or tokens for various providers, then link them to connectors that manage proxy creation and removal.

- Visualization: The coverage map, logs, and analytics help you see data transfers, proxy uptime, and success rates in one place.

Architecture and Workflow

Now that we have covered Scrapoxy’s main features, let’s see how they fit together: how credentials, connectors, and projects each play a part in spinning up proxies, monitoring their status, and distributing traffic efficiently.

- Credentials: You begin by creating credentials in Scrapoxy, which store the authentication details you need to spin up proxies. This might be AWS credentials, GCP service account keys, or tokens for a dedicated proxy service.

- Connectors: Next, you create connectors that define how many proxies you want to run, timeouts, and how to remove offline proxies. Each connector ties to the credentials you previously saved.

- Projects: A project brings everything together. You specify how many proxies to keep online at minimum, the username and password clients need to authenticate with the super proxy, plus advanced features like sticky sessions, auto-scaling, or MITM.

- Usage: After your project is set, Scrapoxy exposes a single proxy endpoint on port 8888. Any traffic directed there (with the right username and password) automatically benefits from IP rotation, ban detection, and other capabilities you configured.
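From a client’s point of view, using the project is ordinary proxy configuration. This Python sketch builds the proxy URL for the endpoint on port 8888 and an opener that sends requests through it. `localhost` assumes Scrapoxy runs on the same machine, and the helper names are hypothetical; the username and password are the ones set in your project.

```python
import urllib.request
from urllib.parse import quote


def scrapoxy_proxy_url(username: str, password: str, host: str = "localhost", port: int = 8888) -> str:
    """Proxy URL carrying the project's credentials (percent-encoded so ':' or '@' are safe)."""
    return f"http://{quote(username, safe='')}:{quote(password, safe='')}@{host}:{port}"


def make_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Opener that sends both HTTP and HTTPS requests through the super proxy."""
    return urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    )


# Example (not executed here); replace the placeholders with your project's credentials:
# opener = make_opener(scrapoxy_proxy_url("PROJECT_USERNAME", "PROJECT_PASSWORD"))
# with opener.open("http://example.com", timeout=30) as resp:
#     print(resp.status)
```

`urllib` decodes the percent-encoded credentials before sending them as proxy authentication, so passwords with special characters work unchanged.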

Use Cases

Web Scraping and Data Collection

Scrapoxy is popular for large-scale or long-running scrapers that need continuous IP rotation. If a site monitors for repeated requests from the same IP, Scrapoxy’s rotation helps you dodge bans and captchas. By enabling sticky sessions, you can stay logged in to a site for tasks like scraping pages behind a login or navigating multi-step forms.

Load Testing and QA

If you want to see how your website or API handles traffic from many different IP addresses or regions, Scrapoxy’s auto-scaling mode can start multiple proxy instances. This is useful for simulating real-world scenarios like global user access or peak load times.
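A simple load test against your own site can send many concurrent requests through the Scrapoxy endpoint and tally the responses. The Python sketch below is an assumption-laden illustration: the thread count, the timeout, and the convention of recording `0` for a connection failure are arbitrary choices, and `proxied_fetch` / `run_load_test` are hypothetical helpers.

```python
from __future__ import annotations

import urllib.error
import urllib.request
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def proxied_fetch(proxy_url: str, timeout: float = 30.0) -> Callable[[str], int]:
    """Build a fetch function that sends each request through the proxy endpoint."""
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    )

    def fetch(url: str) -> int:
        try:
            with opener.open(url, timeout=timeout) as response:
                return response.status
        except urllib.error.HTTPError as err:
            return err.code  # 4xx/5xx responses still count as results
        except OSError:
            return 0  # 0 marks a connection-level failure (URLError is an OSError)

    return fetch


def run_load_test(fetch: Callable[[str], int], url: str, total: int, workers: int = 20) -> Counter:
    """Send `total` requests using `workers` threads and tally the status codes."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return Counter(pool.map(fetch, [url] * total))


# Example (not executed here):
# fetch = proxied_fetch("http://USER:PASS@localhost:8888")
# print(run_load_test(fetch, "https://your-site.example/", total=200))
```

Passing `fetch` in as a function keeps the load loop independent of the transport, so it can be exercised with a stub before pointing it at a real target.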

Security Research and Pentesting

With MITM turned on, Scrapoxy can intercept both HTTP and HTTPS traffic, letting you modify headers, track cookies, or even swap out user agents. This unified approach to traffic manipulation can simplify certain pentesting or security audit tasks, since you only configure settings in one place.

Advanced Features

Coverage Map and Metrics

Scrapoxy’s interface provides a coverage map so you can see proxy geolocation data in real time. It also displays metrics such as:

- Requests per proxy and success rates

- Total bandwidth sent or received

- Proxy uptime and average request times

This level of insight helps you identify which proxies might be slow or repeatedly failing, and it can inform strategic choices about scaling or location targeting.
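The metrics listed above reduce to simple per-proxy aggregates. As a sketch, assuming you have a record per request (the `RequestRecord` fields are hypothetical and not Scrapoxy’s log format), the following computes request counts, success rates, mean latency, and total bytes, then flags proxies below arbitrary thresholds.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestRecord:
    """One proxied request; these fields are assumptions for illustration."""
    proxy: str
    ok: bool
    latency_ms: float
    bytes_sent: int
    bytes_received: int


def summarize(records: list[RequestRecord]) -> dict[str, dict[str, float]]:
    """Per-proxy request count, success rate, mean latency, and total bytes."""
    by_proxy: dict[str, list[RequestRecord]] = defaultdict(list)
    for record in records:
        by_proxy[record.proxy].append(record)
    return {
        proxy: {
            "requests": len(rs),
            "success_rate": sum(r.ok for r in rs) / len(rs),
            "avg_latency_ms": mean(r.latency_ms for r in rs),
            "bytes_total": sum(r.bytes_sent + r.bytes_received for r in rs),
        }
        for proxy, rs in by_proxy.items()
    }


def flag_problem_proxies(summary: dict[str, dict[str, float]],
                         min_success_rate: float = 0.9,
                         max_avg_latency_ms: float = 2000.0) -> list[str]:
    """Proxies that fail too often or respond too slowly (thresholds are arbitrary)."""
    return sorted(
        proxy for proxy, stats in summary.items()
        if stats["success_rate"] < min_success_rate
        or stats["avg_latency_ms"] > max_avg_latency_ms
    )
```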

User-Agent Overrides and TLS Cipher Shuffling

Many websites look for browser or TLS “fingerprints”, such as the user agent string or the order of cipher suites offered during the TLS handshake, that hint you might be automating. Scrapoxy helps you randomize user agents and shuffle TLS ciphers, making your requests appear more varied. This can reduce the likelihood of detection when scraping or testing.
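To show what these two techniques mean mechanically, here is a client-side Python sketch. It is not Scrapoxy’s implementation, which applies these on the proxy side. The user agent strings are illustrative examples only. Python’s `SSLContext.set_ciphers` controls TLS 1.2 cipher order; TLS 1.3 suites (named `TLS_*`) are configured separately by OpenSSL, so the sketch shuffles only the TLS 1.2 names.

```python
import random
import ssl

# Illustrative pool only; keep a real list current with actual browser releases.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10.15; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def random_user_agent(rng: random.Random) -> str:
    """Pick a user agent for the next request."""
    return rng.choice(USER_AGENTS)


def shuffled_cipher_context(rng: random.Random) -> ssl.SSLContext:
    """Default context whose TLS 1.2 cipher preference order is randomized."""
    context = ssl.create_default_context()
    names = [c["name"] for c in context.get_ciphers() if not c["name"].startswith("TLS_")]
    rng.shuffle(names)
    if names:  # set_ciphers rejects an empty list
        # Explicitly listed ciphers are offered in the order given.
        context.set_ciphers(":".join(names))
    return context
```

A shuffled order no longer matches any mainstream browser’s fingerprint, so this varies fingerprints between connections rather than imitating a specific browser.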

Multiple Projects

Scrapoxy doesn’t limit you to one project. If you have different scraping campaigns or environments to manage, you can create separate projects. Each project can have its own connectors, scaling rules, and session settings, so your tasks stay neatly organized.

References

Scrapoxy GitHub

https://github.com/fabienvauchelles/scrapoxy

This is where you’ll find the official repo, its open-source code, and a wiki with additional configuration tips.

Docker Hub

https://hub.docker.com/r/scrapoxy/scrapoxy/tags

Prebuilt Docker images make it simple to get Scrapoxy running in a container, often in just one command.

Session Hijacking Attack (OWASP)

https://owasp.org/www-community/attacks/Session_hijacking_attack

An overview of session hijacking, including man-in-the-middle interception as one attack vector, and why it matters in a testing context.

Conclusion

Scrapoxy provides a centralized, adaptable way to manage multiple proxies from multiple sources, helping you tackle common challenges like IP rotation, session management, and proxy health monitoring.

Key Takeaways:

- Efficient Management: Scrapoxy’s dashboard lets you configure, monitor, and rotate proxies in one place, saving you time when working on web scraping, load testing, or security research.

- Automatic Scaling and Rotation: The platform detects traffic spikes and adjusts proxy counts accordingly. It also rotates IPs on a schedule or condition you set, which can help avoid bans.

- Ban Detection and Recovery: Any offline or blocked proxies are automatically removed from rotation, preserving uptime and performance. Once a proxy is back online, Scrapoxy can restore it with minimal interruption.

- Advanced Controls: Features like session stickiness, HTTPS interception (MITM), and user-agent overrides offer deeper customization for cases where you need consistent logins or advanced debugging.

- Coverage and Insights: The coverage map and metrics dashboard give you real-time visibility into where your proxies are located, along with request success rates, bandwidth usage, and more.

By unifying everything behind a single endpoint and giving you the tools for auto-scaling and ban detection, Scrapoxy reduces the complexity of juggling separate proxies across different platforms or services.