In modern network infrastructure, load balancing plays a crucial role in ensuring the smooth and efficient operation of various services. This is especially true for proxy servers, which handle significant amounts of traffic and require efficient management to maintain performance and reliability. Load balancing helps distribute network traffic evenly across multiple servers, preventing any single server from becoming a bottleneck and ensuring optimal performance.

Load balancing is particularly important for proxy servers because it enhances their reliability, ensures high availability, and allows for scalable operations. By effectively distributing the load, load balancing helps maintain uninterrupted service, reduces the risk of server overload, and facilitates the seamless handling of increasing traffic loads.

This article will explain what load balancing is, why it is important for proxy servers, the types of load balancers available, some of the load balancing algorithms suited for proxy servers, and the benefits of effective load balancing in proxy servers.

What is Load Balancing?

Load balancing is the process of distributing incoming network traffic across multiple servers to ensure no single server bears too much load. The general purpose of load balancing is to optimize resource use, maximize throughput, minimize response time, and avoid overload on any single server, ensuring reliability and efficiency.

In short, a load balancer directs each incoming request to the server best suited to fulfill it, so that no single server is overburdened. It uses an algorithm to decide where each request is sent, and it comes in three forms: hardware, software, and cloud-based. The choice of form and algorithm lets operators tailor load balancing to their specific requirements.

Why Load Balancing is Important for Proxy Servers

Load balancing directly impacts a proxy server’s performance, reliability, and scalability. By distributing traffic evenly across multiple proxy servers, load balancing enhances the overall performance, ensuring that no single server is overwhelmed and all servers operate efficiently.

Load balancing also helps maintain high availability and uptime for proxies. By managing traffic distribution and providing failover capabilities, load balancers keep the proxy service reachable even if one or more servers fail. As long as at least one healthy server remains in the pool, requests can be routed to it, which keeps service interruptions to a minimum.
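
The failover behavior described above can be illustrated with a minimal sketch. It assumes a simple rotation over the pool and a health flag per server; the class name `FailoverPool`, its methods, and the `proxy-a`-style server names are hypothetical and not taken from any particular product. Real load balancers usually set the health flag from periodic health checks rather than manual calls.

```python
class FailoverPool:
    """Rotates through proxies and skips any that are marked as down."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._position = 0

    def mark_down(self, server):
        # In practice a failed health check would trigger this call.
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        # Try each server at most once per call, starting where we left off.
        for _ in range(len(self.servers)):
            server = self.servers[self._position]
            self._position = (self._position + 1) % len(self.servers)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy proxy available")


pool = FailoverPool(["proxy-a", "proxy-b", "proxy-c"])
pool.mark_down("proxy-b")
failover_picks = [pool.next_server() for _ in range(4)]
```

With `proxy-b` marked down, the four picks alternate between `proxy-a` and `proxy-c`. Once every server is down, the sketch raises an error instead of returning a dead proxy.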

Furthermore, load balancing plays a vital role in scaling proxy services to handle increasing traffic loads. As the demand on one’s proxy servers grows, load balancers can efficiently distribute the increased traffic, allowing for seamless scalability without compromising performance or reliability.

Types of Load Balancers and Their Suitability for Proxy Servers

There are three main types of load balancers: hardware, software, and cloud load balancers. Each type has distinct characteristics and is suited to different environments based on the specific needs and resources of the proxy servers.

Hardware Load Balancers

Hardware load balancers are physical devices specifically designed for load balancing tasks. They offer high performance, reliability, and advanced features, making them suitable for environments with exceptionally high traffic volumes and strict performance requirements. Key benefits include optimized traffic distribution, enhanced security features, and robust failover mechanisms.

Hardware load balancers are deployed as dedicated devices within a network infrastructure. They are typically installed between the internet and the internal server farm, acting as a gateway that manages incoming traffic. These devices often come with redundant components to ensure high availability and reliability.

Hardware load balancers use specialized hardware components and optimized network interfaces to process and distribute network traffic efficiently.

Hardware load balancers generally involve higher initial costs compared to software and cloud load balancers due to the expense of the physical devices and the need for specialized hardware. They also require ongoing maintenance, which can add to the overall cost.

A hardware load balancer suits a large enterprise with significant traffic demands, such as a major financial institution or a high-traffic e-commerce platform. These environments require the high performance, reliability, and advanced security features that hardware load balancers provide.

Software Load Balancers

Software load balancers are applications that run on standard servers or virtual machines. They offer flexibility, ease of deployment, and cost-effectiveness. Key benefits include the ability to customize configurations, scalability, and integration with existing infrastructure.

Software load balancers can be deployed on any standard server or virtual machine, making them highly versatile. They are installed as software and can run in various environments, including on-premises data centers and cloud platforms. Their deployment can be easily scaled by adding more instances as needed.

Software load balancers are typically more cost-effective than hardware load balancers because they do not require dedicated physical devices. They leverage existing hardware, reducing the initial investment. However, they can still involve licensing costs and may require significant server resources.

A software load balancer suits small to medium-sized businesses or startups with moderate amounts of traffic. For example, a growing e-commerce business might use a software load balancer to manage traffic distribution across its web servers, benefiting from the flexibility and lower cost while still ensuring reliable performance.

Cloud Load Balancers

Cloud load balancers are managed services provided by cloud providers. They offer scalability, ease of management, and integration with other cloud services. Key benefits include automatic scaling, high availability, and reduced management overhead.

Cloud load balancers are deployed as part of a cloud provider’s infrastructure. Users configure and manage the load balancer through the provider’s management console or API. The cloud provider handles the underlying infrastructure, including maintenance and scaling.

Cloud load balancers use the cloud provider’s infrastructure to distribute traffic. They automatically adjust to changes in traffic load by scaling up or down as needed. These services typically offer a range of load balancing algorithms and advanced features like SSL termination and DDoS protection.

Cloud load balancers generally operate on a pay-as-you-go pricing model, which can be more cost-effective than hardware solutions for many organizations. They eliminate the need for upfront hardware investment and reduce ongoing maintenance costs. However, the total cost can vary based on usage and the specific features required.

A cloud load balancer suits organizations that already run on cloud infrastructure or need to scale quickly. For instance, a global streaming service using AWS or Google Cloud could deploy a cloud load balancer to manage traffic across multiple regions, helping keep latency low and availability high for users worldwide.

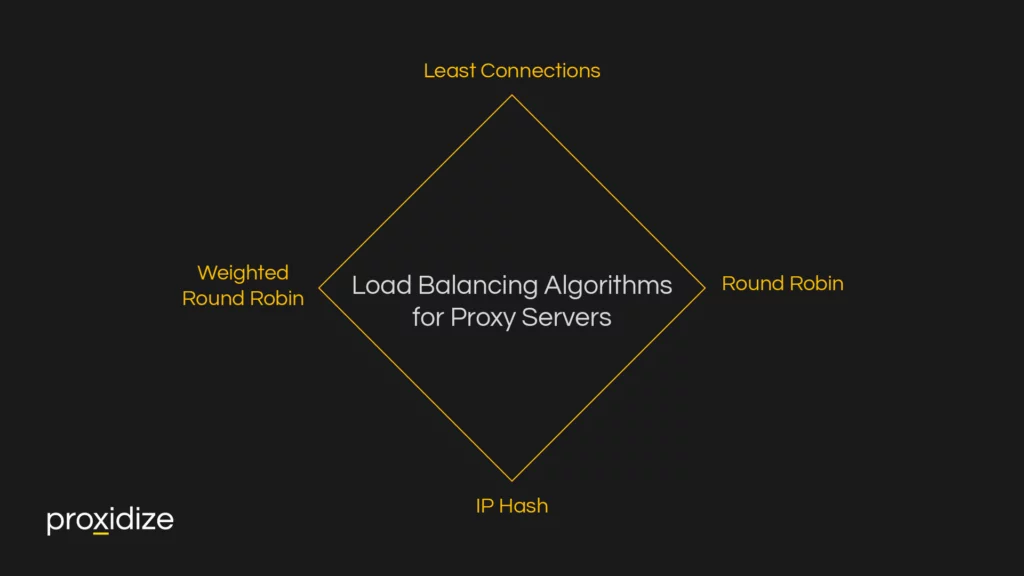

Load Balancing Algorithms for Proxy Servers

Load balancing algorithms determine how incoming traffic is distributed across multiple servers. The most relevant algorithms for proxy servers include:

Round Robin

Round Robin is a simple and commonly used load balancing algorithm that distributes incoming requests sequentially across a pool of servers. When a request is received, it is sent to the next server in line, so each server receives an equal number of requests over time.

This method is easy to implement and works well in environments where servers have similar capabilities and load handling capacities. However, it may not be optimal in scenarios where server performance varies significantly, as it does not consider the current load or capacity of each server.
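
As a rough illustration of the rotation described above, the following sketch cycles through a fixed pool. The proxy addresses and the `RoundRobinBalancer` name are placeholders chosen for this example.

```python
from itertools import cycle

# Hypothetical proxy pool; the addresses are placeholders.
PROXIES = ["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"]


class RoundRobinBalancer:
    """Hands out servers in a fixed, repeating order."""

    def __init__(self, servers):
        servers = list(servers)
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def next_server(self):
        return next(self._rotation)


rr = RoundRobinBalancer(PROXIES)
rr_picks = [rr.next_server() for _ in range(6)]
```

Six requests against three servers walk the pool twice in the same order, so each server receives exactly two requests regardless of how busy it is.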

Least Connections

The Least Connections algorithm directs traffic to the server with the fewest active connections at the time of the request. This approach helps balance the load more dynamically by considering the current workload on each server.

This makes it suitable for environments where traffic patterns are unpredictable or where servers have varying capacities. By continuously monitoring the number of active connections, Least Connections can effectively distribute traffic to prevent any single server from becoming overloaded.
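
A minimal sketch of this selection rule, assuming the balancer itself keeps a counter of open connections per server, might look like the following. The names `LeastConnectionsBalancer`, `acquire`, and `release` are hypothetical.

```python
class LeastConnectionsBalancer:
    """Sends each new connection to the server with the fewest open ones."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self):
        # min() returns the first server with the lowest count,
        # so ties go to the server listed earliest.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server):
        # Call this when a connection closes.
        if self.active[server] > 0:
            self.active[server] -= 1


lc = LeastConnectionsBalancer(["proxy-a", "proxy-b", "proxy-c"])
first = lc.acquire()
second = lc.acquire()
lc.release(first)
third = lc.acquire()
```

Here the first two connections go to `proxy-a` and `proxy-b`. After the first connection closes, `proxy-a` is idle again and ties with `proxy-c`, so the third connection returns to `proxy-a` under the tie-breaking rule noted in the comment.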

Weighted Round Robin

Weighted Round Robin builds on the basic Round Robin algorithm by assigning a weight to each server based on its capacity or performance. Servers with higher weights get proportionally more requests.

This method allows for more fine-tuned control over traffic distribution, ensuring that more powerful servers handle a larger share of the load. Weighted Round Robin is particularly useful in cases where servers have different capabilities.
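
One simple way to sketch weighting is to repeat each server in the rotation as many times as its weight. This is an illustrative simplification with made-up server names and weights; it sends requests to the heaviest server in bursts, whereas production balancers often interleave servers more smoothly.

```python
from itertools import cycle


def weighted_round_robin(weights):
    """Return an endless rotation where each server appears `weight` times per cycle."""
    schedule = [
        server
        for server, weight in weights.items()
        for _ in range(weight)
    ]
    if not schedule:
        raise ValueError("at least one server needs a positive weight")
    return cycle(schedule)


# Hypothetical weights: the larger server takes three requests per cycle.
WEIGHTS = {"proxy-large": 3, "proxy-medium": 2, "proxy-small": 1}
rotation = weighted_round_robin(WEIGHTS)
one_cycle = [next(rotation) for _ in range(sum(WEIGHTS.values()))]
```

Over one full cycle of six requests, `proxy-large` handles three, `proxy-medium` two, and `proxy-small` one, matching the 3:2:1 weights.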

IP Hash

IP Hash is a load balancing algorithm that uses the client’s IP address to decide which server will handle the request. A hash function is applied to the client’s IP address to generate a hash value, which is then used to map the request to a specific server.

This approach ensures that the same client consistently connects to the same server, providing session persistence and maintaining a continuous user experience. IP Hash is beneficial for applications in which the server keeps information about a user’s previous activity (e.g. e-commerce shopping carts or webmail services).
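
The mapping from client IP to server can be sketched as follows. The function name `pick_server`, the server names, and the choice of SHA-256 are assumptions made for this example. A stable hash from `hashlib` is used because Python's built-in `hash()` is randomized per process for strings, which would break persistence across restarts.

```python
import hashlib

# Hypothetical proxy pool for illustration.
SERVERS = ["proxy-a", "proxy-b", "proxy-c"]


def pick_server(client_ip, servers):
    """Map a client IP to one server, the same one every time."""
    if not servers:
        raise ValueError("at least one server is required")
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    # Use the first 8 bytes of the digest as an integer, then wrap it
    # onto the server list with a modulo.
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


chosen = pick_server("203.0.113.7", SERVERS)
chosen_again = pick_server("203.0.113.7", SERVERS)
```

Both calls return the same server for the same address. With this plain modulo mapping, adding or removing a server changes the result for many clients, so persistence only holds while the pool is stable.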

Benefits of Load Balancing for Proxy Servers

- Enhanced Performance

- Increased Reliability

- Cost Efficiency

- Scalability

- Efficient Use of Resources

- Improved Security

- Consistent User Experience

Load balancing offers a variety of benefits: it prevents any single server from being overwhelmed and helps ensure your proxies are available when they’re needed. With efficient load balancing in place, new servers can be added to the pool with little effort, and the utilization of each one is maximized.

Load Balancers vs. Reverse Proxies

A reverse proxy server is an intermediary between users and backend servers. When a client makes a request, the reverse proxy forwards it to an appropriate backend server and then returns that server’s response to the client. This arrangement hides the identity of the backend servers, and the reverse proxy can also act as a load balancer while providing other services such as SSL termination and caching.

Load balancers and reverse proxies overlap heavily: both can distribute incoming traffic across different servers to optimize resource utilization, and advanced load balancers can be configured to incorporate many of the features of reverse proxies.

Where they differ is largely in primary function. A dedicated load balancer exists mainly to distribute traffic and prevent any one server from being overloaded. A reverse proxy, by contrast, is used mainly to shield backend servers from direct access. Many reverse proxies also include load balancing among their core functions, so they add a layer of protection for backend servers on top of the traffic distribution a dedicated load balancer provides.

Conclusion

Load balancing is a key component of network management: it makes the best use of available servers by distributing traffic evenly across them. It matters especially for proxy servers, where efficiency, high reliability, and scalability are crucial.

This article has covered what load balancing is, its importance for proxy servers, and the various types of load balancers. It has also explored the most relevant load balancing algorithms, such as Round Robin, Least Connections, Weighted Round Robin, and IP Hash, explaining how they manage traffic distribution.

Furthermore, the article highlighted the benefits of load balancing for proxy servers, and the key differences between load balancers and reverse proxies.

Frequently Asked Questions

What is the minimum number of load balancers needed to configure active/active load balancing?

To configure active/active load balancing, a minimum of two load balancers is required. This setup allows traffic to be distributed across both load balancers, ensuring that if one fails, the other can continue to manage traffic without interruption.

What is adaptive load balancing?

Adaptive load balancing is a dynamic method of distributing network traffic across multiple servers based on real-time analysis of server load and performance metrics. This approach adjusts the traffic distribution as conditions change, ensuring optimal performance and resource utilization.
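
As one possible illustration, the sketch below adapts to a single metric, response time, by keeping a smoothed average per server and picking the lowest. The class name, the `smoothing` parameter, and the choice of metric are assumptions for this example; real adaptive balancers may combine several metrics such as CPU load or error rate.

```python
class AdaptiveBalancer:
    """Prefers the server with the lowest smoothed response time."""

    def __init__(self, servers, smoothing=0.3):
        self.smoothing = smoothing
        # Servers start at 0.0, so untested servers are tried first.
        self.latency = {server: 0.0 for server in servers}

    def record(self, server, seconds):
        # Exponentially weighted moving average: recent samples count more.
        previous = self.latency[server]
        self.latency[server] = (
            (1 - self.smoothing) * previous + self.smoothing * seconds
        )

    def pick(self):
        return min(self.latency, key=self.latency.get)


adaptive = AdaptiveBalancer(["proxy-a", "proxy-b"])
adaptive.record("proxy-a", 0.50)
adaptive.record("proxy-b", 0.10)
before = adaptive.pick()
adaptive.record("proxy-b", 2.00)  # proxy-b slows down sharply
after = adaptive.pick()
```

The balancer first prefers `proxy-b`, the faster server. After one slow response from `proxy-b`, its smoothed latency rises above that of `proxy-a`, and the next pick shifts to `proxy-a`.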

Which DNS record needs to be configured for a network load balancing cluster?

For a network load balancing cluster, an A (Address) record or a CNAME (Canonical Name) record needs to be configured in DNS. An A record points to the load balancer’s IP address, while a CNAME record points to its hostname. Either way, the record directs traffic to the load balancer, which passes it on to the cluster of servers behind it.